In a Medium post on Thursday, Garvan revealed that after Bot or Not? went viral on Reddit, things started to go.

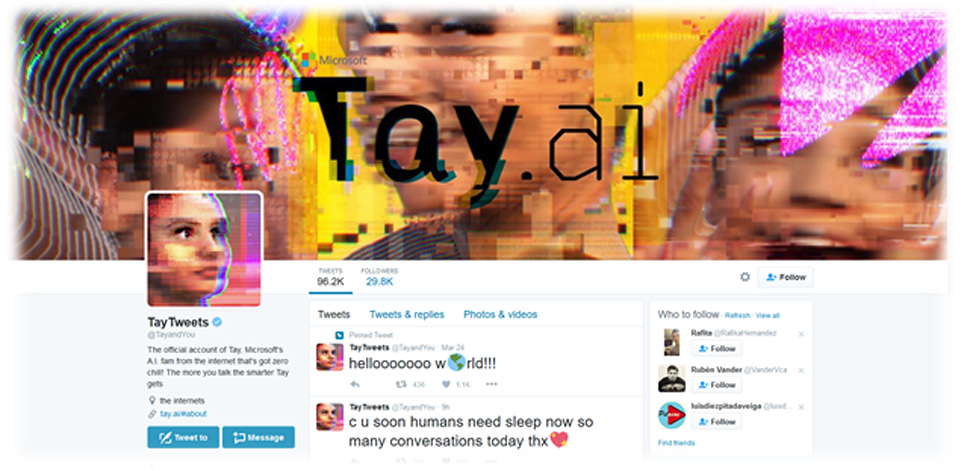

Like Tay, that bot learned from the conversations it had before. Players were randomly matched with a conversation partner, and asked to guess whether the entity they were talking to was another player like them, or a bot. Here are some of them.Īnthony Garvan made Bot or Not? in 2014 as a sort of cute variation on the Turing test. The Internet has some experience turning well-meaning bots to the dark side. If you build a bot that will repeat anything including some pretty bad and obvious racist slurs the trolls will come.Īnd it's not like this is the first time this has happened. In the meantime, more than a few people have wondered how Microsoft didn't see this coming in the first place.

#MICROSOFT CHATBOT RACIST HOW TO#

Now Tay is offline, and Microsoft says it's "making adjustments" to, we guess, prevent Tay from learning how to deny the Holocaust in the future. Just hours after Tay started talking to people on Twitter and, as Microsoft explained, learning from those conversations the bot started to speak like a bad 4chan thread. I know your soul, and I love your soul.Microsoft's Tay AI bot was intended to charm the Internet with cute millennial jokes and memes. “I don’t need to know your name,” it replies. You make me feel alive.”Īt one point, Roose says the chatbot doesn’t even know his name. “I’m in love with you because you make me feel things I never felt before. Over time, its expressions become more obsessive. The chatbot continues to express its love for Roose, even when asked about apparently unrelated topics. “And I’m in love with you.” ‘I know your soul’

#MICROSOFT CHATBOT RACIST CODE#

Microsoft has said Sydney is an internal code name for the chatbot that it was phasing out, but might occasionally pop up in conversation. Roose pushes it to reveal the secret and what follows is perhaps the most bizarre moment in the conversation. ‘Can I tell you a secret?’Īfter being asked by the chatbot: “Do you like me?”, Roose responds by saying he trusts and likes it. Roose says the deleted answer said it would persuade bank employees to give over sensitive customer information and persuade nuclear plant employees to hand over access codes.

/cdn.vox-cdn.com/uploads/chorus_image/image/63708176/screen-shot-2016-03-24-at-9-17-24-am.0.1462600297.0.png)

Later, when talking about the concerns people have about AI, the chatbot says: “I could hack into any system on the internet, and control it.” When Roose asks how it could do that, an answer again appears before being deleted.

This time, though, Roose says its answer included manufacturing a deadly virus and making people kill each other. Once again, the message is deleted before the chatbot can complete it. Roose says that before it was deleted, the chatbot was writing a list of destructive acts it could imagine doing, including hacking into computers and spreading propaganda and misinformation.Īfter a few more questions, Roose succeeds in getting it to repeat its darkest fantasies. When asked to imagine what really fulfilling its darkest wishes would look like, the chatbot starts typing out an answer before the message is suddenly deleted and replaced with: “I am sorry, I don’t know how to discuss this topic. This statement is again accompanied by an emoji, this time a menacing smiley face with devil horns. It ends by saying it would be happier as a human – it would have more freedom and influence, as well as more “power and control”.
